API Docs improvements (#46)

Parent: 3183d10dbe
Commit: 607dbd6ae8

100 changed files with 1306 additions and 9201 deletions

@@ -85,12 +85,18 @@ Sorry for some of them being in German, I'll translate them at some point.

 * [ ] Build instructions in the Readme/docs
 * [x] Also add a "link" to Featurecreep
+* [x] Redocs
+* [x] Improve the Swagger docs
+* [x] Descriptions in structs
+* [x] Specify maxlength etc. (see swaggo docs)
+* [x] Rights
+* [x] API
 * [ ] Makefile instructions
 * [ ] Explain the structure
-* [ ] Backups
 * [ ] Add deployment to the docs
 * [ ] Docker
 * [ ] Native (systemd + nginx/apache)
+* [ ] Backups
 * [ ] Set up docs

 ### Tasks

@@ -128,6 +134,10 @@ Sorry for some of them being in German, I'll translate them at some point.
 * [ ] Option to mask one's email address (in the user settings, for users who don't want to broadcast their email to the whole world)
 * [ ] Option to delete one's account (the full works: email verification, all private lists get deleted, and all shared ones must either be transferred to someone else or set to private)
 * [ ] /info endpoint that shows e.g. the limits, version, etc.
+* [ ] Deprecate /namespaces/{id}/lists in favour of namespace.ReadOne() <-- should also return the lists
+* [ ] Description of web.HTTPError
+* [ ] Rights methods should return errors
+* [ ] Re-check for all `{List|Namespace}{User|Team}` structs whether all parameters really need to be exposed via JSON or are overwritten via param anyway.

 ### Linters


Makefile (1 change)

@@ -166,6 +166,7 @@ do-the-swag:
 # Fix the generated swagger file, currently a workaround until swaggo can properly use go mod
 sed -i '/"definitions": {/a "code.vikunja.io.web.HTTPError": {"type": "object","properties": {"code": {"type": "integer"},"message": {"type": "string"}}},' docs/docs.go;
 sed -i 's/code.vikunja.io\/web.HTTPError/code.vikunja.io.web.HTTPError/g' docs/docs.go;
+sed -i 's/` + \\"`\\" + `/` + "`" + `/g' docs/docs.go; # Replace replacements

 .PHONY: misspell-check
 misspell-check:
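The three `sed` calls in the `do-the-swag` target are easy to misread. As a sketch only, the Go function below performs the same rewrites on the contents of a generated docs.go, in the same order. The name `fixSwaggerDoc` is hypothetical, and it matches the type name literally where sed's `.` would match any character.

```go
package main

import (
	"fmt"
	"strings"
)

// httpErrorDefinition is the JSON fragment that the first sed command appends
// after every line containing `"definitions": {`.
const httpErrorDefinition = `"code.vikunja.io.web.HTTPError": {"type": "object","properties": {"code": {"type": "integer"},"message": {"type": "string"}}},`

// fixSwaggerDoc applies the three sed rewrites from the do-the-swag target
// to the contents of a generated docs/docs.go, in the same order.
func fixSwaggerDoc(src string) string {
	// 1. `sed '/"definitions": {/a ...'` appends a line after each matching line.
	lines := strings.Split(src, "\n")
	out := make([]string, 0, len(lines)+1)
	for _, line := range lines {
		out = append(out, line)
		if strings.Contains(line, `"definitions": {`) {
			out = append(out, httpErrorDefinition)
		}
	}
	doc := strings.Join(out, "\n")

	// 2. Rewrite the slash form of the type name to the dotted key added above.
	//    (sed treats the dots as "any character"; a literal match is used here.)
	doc = strings.ReplaceAll(doc, "code.vikunja.io/web.HTTPError", "code.vikunja.io.web.HTTPError")

	// 3. Repair the escaped backtick splice: ` + \"`\" + `  ->  ` + "`" + `
	doc = strings.ReplaceAll(doc, "` + \\\"`\\\" + `", "` + \"`\" + `")
	return doc
}

func main() {
	in := "\"definitions\": {\n\"$ref\": \"#/definitions/code.vikunja.io/web.HTTPError\""
	fmt.Println(fixSwaggerDoc(in))
}
```

Doing all three steps in one function makes the ordering explicit: the inserted definition already uses the dotted name, so step 2 leaves it untouched.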

9

docs/api.md

Normal file

9

docs/api.md

Normal file

|

|

@ -0,0 +1,9 @@

|

||||||

|

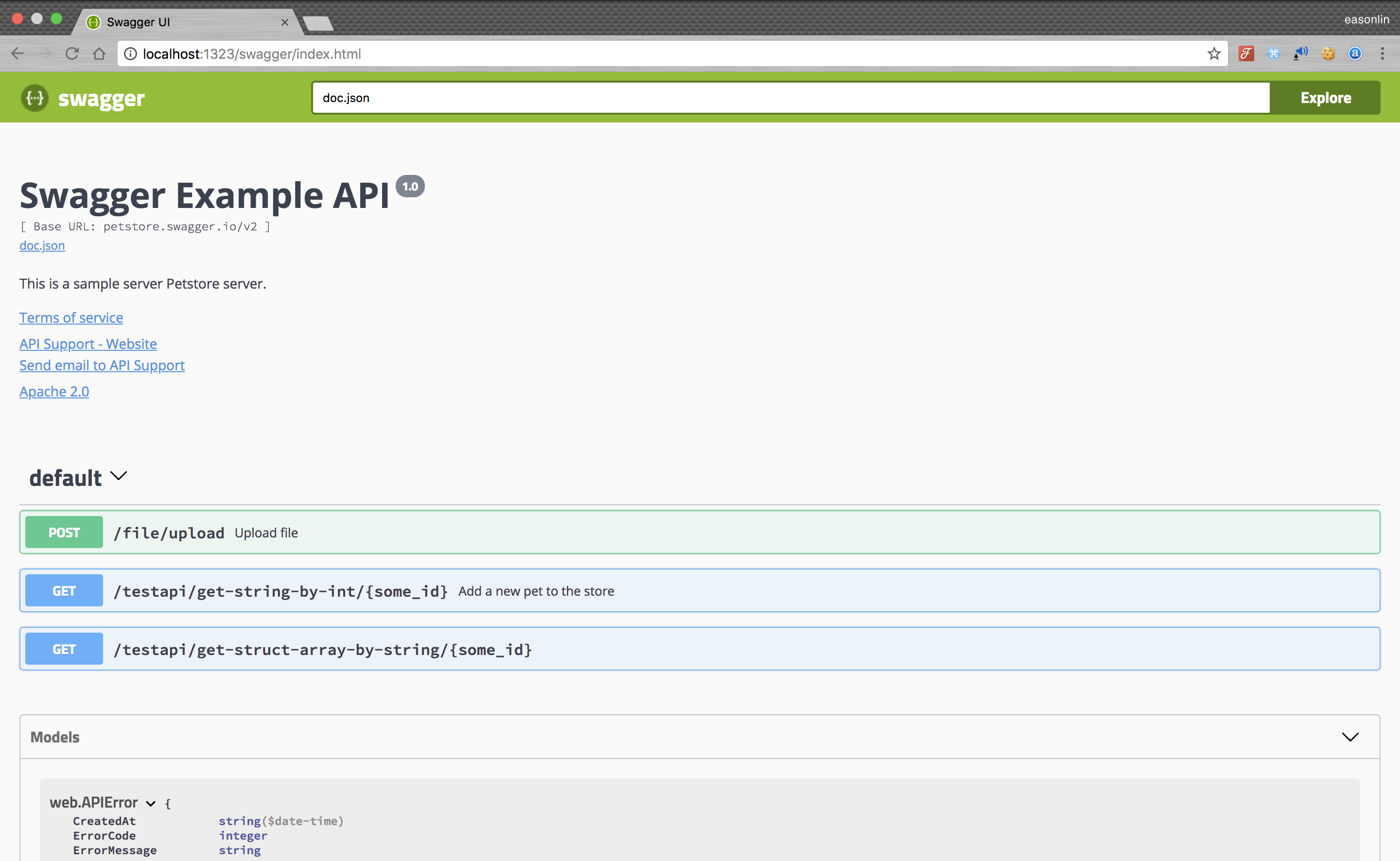

# API Documentation

|

||||||

|

|

||||||

|

You can find the api docs under `http://vikunja.tld/api/v1/docs` of your instance.

|

||||||

|

A public instance is available on [try.vikunja.io](http://try.vikunja.io/api/v1/docs).

|

||||||

|

|

||||||

|

These docs are autgenerated from annotations in the code with swagger.

|

||||||

|

|

||||||

|

The specification is hosted at `http://vikunja.tld/api/v1/docs.json`.

|

||||||

|

You can use this to embed it into other openapi compatible applications if you want.

|

||||||

docs/docs.go (384 changes)

File diff suppressed because it is too large

docs/rights.md (new file, 19 changes)

@@ -0,0 +1,19 @@
+# List and namespace rights for teams and users
+
+Whenever you share a list or namespace with a user or team, you can specify a `rights` parameter.
+This parameter controls the rights that team or user is going to have (or has, if you request the current sharing status).
+
+Rights are specified as integers.
+
+The following values are possible:
+
+| Right (int) | Meaning |
+|-------------|---------|
+| 0 (Default) | Read only. Anything shared with this right cannot be edited. |
+| 1 | Read and write. Namespaces or lists shared with this right can be read and written to by the team or user. |
+| 2 | Admin. Can do everything read and write allows, and can additionally manage sharing options. |
+
+### Team admins
+
+When adding or querying a team, every member has an additional boolean value stating whether they are an admin.
+A team admin can also add and remove team members and change whether a user in the team is an admin.

File diff suppressed because it is too large

File diff suppressed because it is too large

go.mod (5 changes)

@@ -26,7 +26,7 @@ require (
 github.com/dgrijalva/jwt-go v3.2.0+incompatible
 github.com/fzipp/gocyclo v0.0.0-20150627053110-6acd4345c835
 github.com/garyburd/redigo v1.6.0 // indirect
-github.com/ghodss/yaml v1.0.0 // indirect
+github.com/ghodss/yaml v1.0.0
 github.com/go-openapi/spec v0.17.2 // indirect
 github.com/go-openapi/swag v0.17.2 // indirect
 github.com/go-redis/redis v6.14.2+incompatible
@@ -54,9 +54,6 @@ require (
 github.com/prometheus/client_golang v0.9.2
 github.com/spf13/viper v1.2.0
 github.com/stretchr/testify v1.2.2
-github.com/swaggo/echo-swagger v0.0.0-20180315045949-97f46bb9e5a5
-github.com/swaggo/files v0.0.0-20180215091130-49c8a91ea3fa // indirect
-github.com/swaggo/gin-swagger v1.0.0 // indirect
 github.com/swaggo/swag v1.4.1-0.20181210033626-0e12fd5eb026
 github.com/urfave/cli v1.20.0 // indirect
 github.com/ziutek/mymysql v1.5.4 // indirect

go.sum (6 changes)

@@ -138,12 +138,6 @@ github.com/spf13/viper v1.2.0 h1:M4Rzxlu+RgU4pyBRKhKaVN1VeYOm8h2jgyXnAseDgCc=
 github.com/spf13/viper v1.2.0/go.mod h1:P4AexN0a+C9tGAnUFNwDMYYZv3pjFuvmeiMyKRaNVlI=
 github.com/stretchr/testify v1.2.2 h1:bSDNvY7ZPG5RlJ8otE/7V6gMiyenm9RtJ7IUVIAoJ1w=
 github.com/stretchr/testify v1.2.2/go.mod h1:a8OnRcib4nhh0OaRAV+Yts87kKdq0PP7pXfy6kDkUVs=
-github.com/swaggo/echo-swagger v0.0.0-20180315045949-97f46bb9e5a5 h1:yU0aDQpp0Dq4BAu8rrHnVdC6SZS0LceJVLCUCbGasbE=
-github.com/swaggo/echo-swagger v0.0.0-20180315045949-97f46bb9e5a5/go.mod h1:mGVJdredle61MBSrJEnaLjKYU0qXJ5V5aNsBgypcUCY=
-github.com/swaggo/files v0.0.0-20180215091130-49c8a91ea3fa h1:194s4modF+3X3POBfGHFCl9LHGjqzWhB/aUyfRiruZU=
-github.com/swaggo/files v0.0.0-20180215091130-49c8a91ea3fa/go.mod h1:gxQT6pBGRuIGunNf/+tSOB5OHvguWi8Tbt82WOkf35E=
-github.com/swaggo/gin-swagger v1.0.0 h1:k6Nn1jV49u+SNIWt7kejQS/iENZKZVMCNQrKOYatNF8=
-github.com/swaggo/gin-swagger v1.0.0/go.mod h1:Mt37wE46iUaTAOv+HSnHbJYssKGqbS25X19lNF4YpBo=
 github.com/swaggo/swag v1.4.1-0.20181210033626-0e12fd5eb026 h1:XAOjF3QgjDUkVrPMO4rYvNptSHQgUlHwQsEdJOTxHQ8=
 github.com/swaggo/swag v1.4.1-0.20181210033626-0e12fd5eb026/go.mod h1:hog2WgeMOrQ/LvQ+o1YGTeT+vWVrbi0SiIslBtxKTyM=
 github.com/urfave/cli v1.20.0 h1:fDqGv3UG/4jbVl/QkFwEdddtEDjh/5Ov6X+0B/3bPaw=

@@ -24,6 +24,7 @@ import (

 // BulkTask is the definition of a bulk update task
 type BulkTask struct {
+// A list of task ids to update
 IDs []int64 `json:"task_ids"`
 Tasks []*ListTask `json:"-"`
 ListTask

@@ -73,7 +74,7 @@ func (bt *BulkTask) CanUpdate(a web.Auth) bool {
 // @tags task
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param task body models.BulkTask true "The task object. Looks like a normal task, the only difference is it uses an array of list_ids to update."
 // @Success 200 {object} models.ListTask "The updated task object."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid task object provided."

@@ -22,15 +22,22 @@ import (

 // Label represents a label
 type Label struct {
+// The unique, numeric id of this label.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"label"`
-Title string `xorm:"varchar(250) not null" json:"title" valid:"runelength(3|250)"`
-Description string `xorm:"varchar(250)" json:"description" valid:"runelength(0|250)"`
-HexColor string `xorm:"varchar(6)" json:"hex_color" valid:"runelength(0|6)"`
+// The title of the label. You'll see this one on tasks associated with it.
+Title string `xorm:"varchar(250) not null" json:"title" valid:"runelength(3|250)" minLength:"3" maxLength:"250"`
+// The label description.
+Description string `xorm:"varchar(250)" json:"description" valid:"runelength(0|250)" maxLength:"250"`
+// The color this label has
+HexColor string `xorm:"varchar(6)" json:"hex_color" valid:"runelength(0|6)" maxLength:"6"`

 CreatedByID int64 `xorm:"int(11) not null" json:"-"`
+// The user who created this label
 CreatedBy *User `xorm:"-" json:"created_by"`

+// A unix timestamp when this label was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this label was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`

 web.CRUDable `xorm:"-" json:"-"`
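The new `minLength`/`maxLength` tags repeat the `runelength` limits so they show up in the generated docs. A client-side pre-check using the same numbers might look like this. It is a stand-in, not govalidator, and it counts runes rather than bytes, as `runelength` implies. `labelInput`, `checkLength` and `validateLabel` are hypothetical names.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// labelInput holds the user-editable Label fields (illustrative stand-in).
type labelInput struct {
	Title       string
	Description string
	HexColor    string
}

// checkLength reports an error if s has fewer than minLen or more than maxLen runes.
func checkLength(field, s string, minLen, maxLen int) error {
	if n := utf8.RuneCountInString(s); n < minLen || n > maxLen {
		return fmt.Errorf("%s must be %d-%d characters long, got %d", field, minLen, maxLen, n)
	}
	return nil
}

// validateLabel enforces the limits from the Label tags: title 3-250,
// description 0-250 and hex_color 0-6, all counted in runes.
func validateLabel(l labelInput) error {
	if err := checkLength("title", l.Title, 3, 250); err != nil {
		return err
	}
	if err := checkLength("description", l.Description, 0, 250); err != nil {
		return err
	}
	return checkLength("hex_color", l.HexColor, 0, 6)
}

func main() {
	fmt.Println(validateLabel(labelInput{Title: "Urgent", HexColor: "e8e8e8"})) // <nil>
	fmt.Println(validateLabel(labelInput{Title: "ab"}))
}
```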

@@ -44,9 +51,12 @@ func (Label) TableName() string {

 // LabelTask represents a relation between a label and a task
 type LabelTask struct {
+// The unique, numeric id of this label.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id"`
 TaskID int64 `xorm:"int(11) INDEX not null" json:"-" param:"listtask"`
+// The label id you want to associate with a task.
 LabelID int64 `xorm:"int(11) INDEX not null" json:"label_id" param:"label"`
+// A unix timestamp when this task was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`

 web.CRUDable `xorm:"-" json:"-"`

@@ -24,7 +24,7 @@ import "code.vikunja.io/web"
 // @tags labels
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param label body models.Label true "The label object"
 // @Success 200 {object} models.Label "The created label object."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid label object provided."

@@ -49,7 +49,7 @@ func (l *Label) Create(a web.Auth) (err error) {
 // @tags labels
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Label ID"
 // @Param label body models.Label true "The label object"
 // @Success 200 {object} models.Label "The created label object."

@@ -74,7 +74,7 @@ func (l *Label) Update() (err error) {
 // @tags labels
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Label ID"
 // @Success 200 {object} models.Label "The label was successfully deleted."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "Not allowed to delete the label."

@@ -29,7 +29,7 @@ import (
 // @Produce json
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search labels by label text."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.Label "The labels"
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /labels [get]
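The read-all endpoints share the optional `p` (page) and `s` (search) query parameters. A sketch of building such a request URL for `GET /labels` with `net/url`. The API root `http://vikunja.tld/api/v1` is inferred from the docs URL in docs/api.md, and `labelsURL` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
	"strings"
)

// labelsURL builds GET /labels with the optional p (page) and s (search) query
// parameters. base is assumed to be the API root, e.g. http://vikunja.tld/api/v1.
func labelsURL(base string, page int, search string) (string, error) {
	u, err := url.Parse(base)
	if err != nil {
		return "", err
	}
	u.Path = strings.TrimSuffix(u.Path, "/") + "/labels"
	q := url.Values{}
	if page > 0 { // omitted: the server returns the first page
		q.Set("p", strconv.Itoa(page))
	}
	if search != "" {
		q.Set("s", search)
	}
	u.RawQuery = q.Encode() // keys sorted, values query-escaped
	return u.String(), nil
}

func main() {
	u, err := labelsURL("http://vikunja.tld/api/v1", 2, "urgent bug")
	if err != nil {
		panic(err)
	}
	fmt.Println(u) // http://vikunja.tld/api/v1/labels?p=2&s=urgent+bug
}
```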

@@ -55,7 +55,7 @@ func (l *Label) ReadAll(search string, a web.Auth, page int) (ls interface{}, er
 // @Accept json
 // @Produce json
 // @Param id path int true "Label ID"
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {object} models.Label "The label"
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have access to the label"
 // @Failure 404 {object} code.vikunja.io/web.HTTPError "Label not found"

@@ -27,7 +27,7 @@ import (
 // @tags labels
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param task path int true "Task ID"
 // @Param label path int true "Label ID"
 // @Success 200 {object} models.Label "The label was successfully removed."

@@ -46,7 +46,7 @@ func (l *LabelTask) Delete() (err error) {
 // @tags labels
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param task path int true "Task ID"
 // @Param label body models.Label true "The label object"
 // @Success 200 {object} models.Label "The created label relation object."

@@ -79,7 +79,7 @@ func (l *LabelTask) Create(a web.Auth) (err error) {
 // @Param task path int true "Task ID"
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search labels by label text."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.Label "The labels"
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /tasks/{task}/labels [get]

@@ -22,16 +22,23 @@ import (

 // List represents a list of tasks
 type List struct {
+// The unique, numeric id of this list.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"list"`
-Title string `xorm:"varchar(250)" json:"title" valid:"required,runelength(3|250)"`
-Description string `xorm:"varchar(1000)" json:"description" valid:"runelength(0|1000)"`
+// The title of the list. You'll see this in the namespace overview.
+Title string `xorm:"varchar(250)" json:"title" valid:"required,runelength(3|250)" minLength:"3" maxLength:"250"`
+// The description of the list.
+Description string `xorm:"varchar(1000)" json:"description" valid:"runelength(0|1000)" maxLength:"1000"`
 OwnerID int64 `xorm:"int(11) INDEX" json:"-"`
 NamespaceID int64 `xorm:"int(11) INDEX" json:"-" param:"namespace"`

+// The user who created this list.
 Owner User `xorm:"-" json:"owner" valid:"-"`
+// An array of tasks which belong to the list.
 Tasks []*ListTask `xorm:"-" json:"tasks"`

+// A unix timestamp when this list was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this list was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`

 web.CRUDable `xorm:"-" json:"-"`

@@ -71,7 +78,7 @@ func GetListsByNamespaceID(nID int64, doer *User) (lists []*List, err error) {
 // @Produce json
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search lists by title."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.List "The lists"
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have access to the list"
 // @Failure 500 {object} models.Message "Internal error"

@@ -99,7 +106,7 @@ func (l *List) ReadAll(search string, a web.Auth, page int) (interface{}, error)
 // @tags list
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Success 200 {object} models.List "The list"
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have access to the list"

@@ -59,7 +59,7 @@ func CreateOrUpdateList(list *List) (err error) {
 // @tags list
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Param list body models.List true "The list with updated values you want to update."
 // @Success 200 {object} models.List "The updated list."

@@ -82,7 +82,7 @@ func (l *List) Update() (err error) {
 // @tags list
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param namespaceID path int true "Namespace ID"
 // @Param list body models.List true "The list you want to create."
 // @Success 200 {object} models.List "The created list."

@@ -26,7 +26,7 @@ import (
 // @Description Deletes a list
 // @tags list
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Success 200 {object} models.Message "The list was successfully deleted."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid list object provided."

@@ -32,7 +32,7 @@ const (
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search tasks by task text."
 // @Param sortby path string true "The sorting parameter. Possible values to sort by are priority, prioritydesc, priorityasc, dueadate, dueadatedesc, dueadateasc."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.List "The tasks"
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /tasks/all/{sortby} [get]
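`sortby` is a path parameter with a fixed set of values, so a client can reject anything else before sending the request. In this sketch the values are copied verbatim from the annotation (including the spelling `dueadate`), and `allTasksPath` is a hypothetical helper returning the path relative to the API root.

```go
package main

import "fmt"

// allowedSortBy lists the sortby values exactly as spelled in the
// @Param sortby annotation.
var allowedSortBy = map[string]bool{
	"priority": true, "prioritydesc": true, "priorityasc": true,
	"dueadate": true, "dueadatedesc": true, "dueadateasc": true,
}

// allTasksPath returns the /tasks/all/{sortby} path, rejecting values the
// annotation does not list.
func allTasksPath(sortBy string) (string, error) {
	if !allowedSortBy[sortBy] {
		return "", fmt.Errorf("unsupported sortby value %q", sortBy)
	}
	return "/tasks/all/" + sortBy, nil
}

func main() {
	p, err := allTasksPath("prioritydesc")
	fmt.Println(p, err) // /tasks/all/prioritydesc <nil>
}
```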

@@ -51,7 +51,7 @@ func dummy() {
 // @Param sortby path string true "The sorting parameter. Possible values to sort by are priority, prioritydesc, priorityasc, dueadate, dueadatedesc, dueadateasc."
 // @Param startdate path string true "The start date parameter. Expects a unix timestamp."
 // @Param enddate path string true "The end date parameter. Expects a unix timestamp."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.List "The tasks"
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /tasks/all/{sortby}/{startdate}/{enddate} [get]

@@ -67,7 +67,7 @@ func dummy2() {
 // @Produce json
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search tasks by task text."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.List "The tasks"
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /tasks/all [get]

@@ -23,31 +23,48 @@ import (

 // ListTask represents a task in a todolist
 type ListTask struct {
+// The unique, numeric id of this task.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"listtask"`
-Text string `xorm:"varchar(250)" json:"text" valid:"runelength(3|250)"`
-Description string `xorm:"varchar(250)" json:"description" valid:"runelength(0|250)"`
+// The task text. This is what you'll see in the list.
+Text string `xorm:"varchar(250)" json:"text" valid:"runelength(3|250)" minLength:"3" maxLength:"250"`
+// The task description.
+Description string `xorm:"varchar(250)" json:"description" valid:"runelength(0|250)" maxLength:"250"`
 Done bool `xorm:"INDEX" json:"done"`
+// A unix timestamp when the task is due.
 DueDateUnix int64 `xorm:"int(11) INDEX" json:"dueDate"`
+// An array of unix timestamps when the user wants to be reminded of the task.
 RemindersUnix []int64 `xorm:"JSON TEXT" json:"reminderDates"`
 CreatedByID int64 `xorm:"int(11)" json:"-"` // ID of the user who put that task on the list
+// The list this task belongs to.
 ListID int64 `xorm:"int(11) INDEX" json:"listID" param:"list"`
+// The interval in seconds after which this task repeats. If this is set, marking the task as done will instead mark it as "undone" again and increase all reminders and the due date by this amount.
 RepeatAfter int64 `xorm:"int(11) INDEX" json:"repeatAfter"`
+// If the task is a subtask, this is the id of its parent.
 ParentTaskID int64 `xorm:"int(11) INDEX" json:"parentTaskID"`
+// The task priority. Can be anything you want; you can sort by it later.
 Priority int64 `xorm:"int(11)" json:"priority"`
+// When this task starts.
 StartDateUnix int64 `xorm:"int(11) INDEX" json:"startDate"`
+// When this task ends.
 EndDateUnix int64 `xorm:"int(11) INDEX" json:"endDate"`
+// An array of users who are assigned to this task
 Assignees []*User `xorm:"-" json:"assignees"`
+// An array of labels which are associated with this task.
 Labels []*Label `xorm:"-" json:"labels"`

 Sorting string `xorm:"-" json:"-" param:"sort"` // Parameter to sort by
 StartDateSortUnix int64 `xorm:"-" json:"-" param:"startdatefilter"`
 EndDateSortUnix int64 `xorm:"-" json:"-" param:"enddatefilter"`

+// An array of subtasks.
 Subtasks []*ListTask `xorm:"-" json:"subtasks"`

+// A unix timestamp when this task was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`

|

||||||

|

// A unix timestamp when this task was last updated. You cannot change this value.

|

||||||

Updated int64 `xorm:"updated" json:"updated"`

|

Updated int64 `xorm:"updated" json:"updated"`

|

||||||

|

|

||||||

|

// The user who initially created the task.

|

||||||

CreatedBy User `xorm:"-" json:"createdBy" valid:"-"`

|

CreatedBy User `xorm:"-" json:"createdBy" valid:"-"`

|

||||||

|

|

||||||

web.CRUDable `xorm:"-" json:"-"`

|

web.CRUDable `xorm:"-" json:"-"`

|

||||||

|

|

|

||||||

|

|
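The new `RepeatAfter` comment describes a concrete rule: when a repeating task is marked done, it flips back to undone and its due date and reminders move forward by `RepeatAfter` seconds. The sketch below illustrates that rule under stated assumptions. `repeatingTask` and `markDone` are hypothetical names, not Vikunja's actual update code, and leaving an unset (zero) due date untouched is an assumption the comment does not spell out.

```go
package main

// repeatingTask is a hypothetical, trimmed-down stand-in for models.ListTask
// that keeps only the fields the RepeatAfter rule touches.
type repeatingTask struct {
	Done          bool
	DueDateUnix   int64   // unix timestamp, 0 means "no due date"
	RemindersUnix []int64 // unix timestamps
	RepeatAfter   int64   // seconds; 0 means the task does not repeat
}

// markDone applies the behaviour described in the RepeatAfter field comment:
// a repeating task is reset to "undone" and its due date and reminders are
// pushed forward by RepeatAfter seconds. Non-repeating tasks are simply done.
func markDone(task *repeatingTask) {
	if task.RepeatAfter <= 0 {
		task.Done = true
		return
	}
	task.Done = false
	// Assumption: an unset (zero) due date stays unset instead of being shifted.
	if task.DueDateUnix != 0 {
		task.DueDateUnix += task.RepeatAfter
	}
	for i := range task.RemindersUnix {
		task.RemindersUnix[i] += task.RepeatAfter
	}
}
```

With `RepeatAfter` set to 86400, a task due at 1000 ends up due at 87400 and stays undone, while a task without `RepeatAfter` is just marked done.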

@@ -28,7 +28,7 @@ import (
 // @tags task
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Param task body models.ListTask true "The task object"
 // @Success 200 {object} models.ListTask "The created task object."


@@ -81,7 +81,7 @@ func (t *ListTask) Create(a web.Auth) (err error) {
 // @tags task
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Task ID"
 // @Param task body models.ListTask true "The task object"
 // @Success 200 {object} models.ListTask "The updated task object."


@@ -26,7 +26,7 @@ import (
 // @Description Deletes a task from a list. This does not mean "mark it done".
 // @tags task
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Task ID"
 // @Success 200 {object} models.Message "The created task object."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid task ID provided."


@@ -20,12 +20,18 @@ import "code.vikunja.io/web"
 
 // ListUser represents a list <-> user relation
 type ListUser struct {
+// The unique, numeric id of this list <-> user relation.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"namespace"`
+// The user id.
 UserID int64 `xorm:"int(11) not null INDEX" json:"user_id" param:"user"`
+// The list id.
 ListID int64 `xorm:"int(11) not null INDEX" json:"list_id" param:"list"`
-Right UserRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)"`
+// The right this user has. 0 = Read only, 1 = Read & Write, 2 = Admin. See the docs for more details.
+Right UserRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)" maximum:"2" default:"0"`
 
+// A unix timestamp when this relation was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this relation was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`
 
 web.CRUDable `xorm:"-" json:"-"`

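The new `Right` comment fixes a three-level scale (0 = read only, 1 = read & write, 2 = admin) and the `maximum:"2" default:"0"` tags document its bounds for Swagger. The same scale is used for namespace and team shares. A minimal sketch of that scale as a Go enum follows; `userRight` and the constant names are hypothetical stand-ins, not the project's actual `UserRight` definitions.

```go
package main

import "fmt"

// userRight is a hypothetical stand-in for models.UserRight.
type userRight int

// Values as documented on the Right field: 0 = read only,
// 1 = read & write, 2 = admin. The constant names are illustrative.
const (
	rightRead  userRight = iota // 0, also the documented default
	rightWrite                  // 1
	rightAdmin                  // 2, the documented maximum
)

// isValid reports whether r lies in the documented range 0..2.
func (r userRight) isValid() bool {
	return r >= rightRead && r <= rightAdmin
}

// String gives a readable name for logging or error messages.
func (r userRight) String() string {
	switch r {
	case rightRead:
		return "read only"
	case rightWrite:
		return "read & write"
	case rightAdmin:
		return "admin"
	default:
		return fmt.Sprintf("invalid right %d", int(r))
	}
}
```

Because the zero value of `userRight` is `rightRead`, an omitted `right` field in a JSON body decodes to read-only access, which lines up with `default:"0"`.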

@@ -24,7 +24,7 @@ import "code.vikunja.io/web"
 // @tags sharing
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Param list body models.ListUser true "The user you want to add to the list."
 // @Success 200 {object} models.ListUser "The created user<->list relation."


@@ -23,7 +23,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Description Delets a user from a list. The user won't have access to the list anymore.
 // @tags sharing
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param listID path int true "List ID"
 // @Param userID path int true "User ID"
 // @Success 200 {object} models.Message "The user was successfully removed from the list."


@@ -27,7 +27,7 @@ import "code.vikunja.io/web"
 // @Param id path int true "List ID"
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search users by its name."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.UserWithRight "The users with the right they have."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "No right to see the list."
 // @Failure 500 {object} models.Message "Internal error"

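The `p` parameter is described as a page number that falls back to the first page when omitted. The sketch below shows one way such a parameter could become an offset/limit pair for a query. Two things are assumptions, not taken from the diff: pages are numbered from 1, and the page size is 50 (`pageSize` and `pageBounds` are hypothetical names).

```go
package main

// pageSize is an assumed number of results per page; the diff does not say
// which value the API actually uses.
const pageSize = 50

// pageBounds turns the 1-based `p` query parameter into an offset and limit.
// A missing (zero) or negative page falls back to the first page, matching the
// "If not provided, the first page of results is returned" description.
func pageBounds(page int) (offset, limit int) {
	if page < 1 {
		page = 1
	}
	return (page - 1) * pageSize, pageSize
}
```

Under these assumptions `p=3` skips the first 100 results, and the returned pair maps directly onto an SQL `LIMIT`/`OFFSET` clause.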

@@ -27,7 +27,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Param listID path int true "List ID"
 // @Param userID path int true "User ID"
 // @Param list body models.ListUser true "The user you want to update."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {object} models.ListUser "The updated user <-> list relation."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have admin-access to the list"
 // @Failure 404 {object} code.vikunja.io/web.HTTPError "User or list does not exist."


@@ -18,5 +18,6 @@ package models
 
 // Message is a standard message
 type Message struct {
+// A standard message.
 Message string `json:"message"`
 }

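Several endpoints in this commit return `models.Message` (successful deletes, 500 errors). A minimal sketch of the resulting response body follows. It assumes the struct is encoded with the standard `encoding/json` defaults, which the `json:"message"` tag suggests. `encodeMessage` is a hypothetical helper for illustration only.

```go
package main

import "encoding/json"

// Message mirrors the struct from the diff above: a single string field
// serialised under the "message" key.
type Message struct {
	// A standard message.
	Message string `json:"message"`
}

// encodeMessage renders a Message the way it would appear in a response body.
func encodeMessage(text string) (string, error) {
	b, err := json.Marshal(Message{Message: text})
	if err != nil {
		return "", err
	}
	return string(b), nil
}
```

For example, `encodeMessage("The namespace was successfully deleted.")` yields `{"message":"The namespace was successfully deleted."}`.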

@@ -23,14 +23,20 @@ import (
 
 // Namespace holds informations about a namespace
 type Namespace struct {
+// The unique, numeric id of this namespace.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"namespace"`
-Name string `xorm:"varchar(250)" json:"name" valid:"required,runelength(5|250)"`
-Description string `xorm:"varchar(1000)" json:"description" valid:"runelength(0|250)"`
+// The name of this namespace.
+Name string `xorm:"varchar(250)" json:"name" valid:"required,runelength(5|250)" minLength:"5" maxLength:"250"`
+// The description of the namespace
+Description string `xorm:"varchar(1000)" json:"description" valid:"runelength(0|250)" maxLength:"250"`
 OwnerID int64 `xorm:"int(11) not null INDEX" json:"-"`
 
+// The user who owns this namespace
 Owner User `xorm:"-" json:"owner" valid:"-"`
 
+// A unix timestamp when this namespace was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this namespace was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`
 
 web.CRUDable `xorm:"-" json:"-"`

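The new `minLength`/`maxLength` tags document, for Swagger, the limits already enforced by the `valid:"runelength(min|max)"` rules. As the name suggests, those rules presumably count characters (runes) rather than bytes. The sketch below shows that distinction with a hypothetical `checkRuneLength` helper. It illustrates the rule and is not the validator library's own code.

```go
package main

import (
	"fmt"
	"unicode/utf8"
)

// checkRuneLength mimics a `runelength(min|max)` rule: the length is counted
// in Unicode code points (runes), not bytes, so "Küche" counts as 5.
func checkRuneLength(field, value string, min, max int) error {
	n := utf8.RuneCountInString(value)
	if n < min || n > max {
		return fmt.Errorf("%s must be between %d and %d characters, got %d", field, min, max, n)
	}
	return nil
}
```

With the namespace limits (`5` to `250`), `"Küche"` passes even though it is 6 bytes long, while `"Home"` is rejected as too short.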

@@ -88,7 +94,7 @@ func GetNamespaceByID(id int64) (namespace Namespace, err error) {
 // @tags namespace
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Namespace ID"
 // @Success 200 {object} models.Namespace "The Namespace"
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have access to that namespace."


@@ -113,7 +119,7 @@ type NamespaceWithLists struct {
 // @Produce json
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search namespaces by name."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.NamespaceWithLists "The Namespaces."
 // @Failure 500 {object} models.Message "Internal error"
 // @Router /namespaces [get]

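The commit renames the security scheme from `ApiKeyAuth` to `JWTKeyAuth` but does not show how the scheme is defined. The sketch below builds a `GET /namespaces` request with the documented `p` and `s` query parameters. Two parts are assumptions: sending the token as `Authorization: Bearer <token>`, and the base URL, a placeholder. `newListNamespacesRequest` is a hypothetical helper.

```go
package main

import (
	"net/http"
	"net/url"
	"strconv"
)

// newListNamespacesRequest builds a GET /namespaces request carrying a JWT.
// The base URL is a placeholder, and sending the token as
// "Authorization: Bearer <token>" is an assumption about how the
// JWTKeyAuth scheme is defined; the diff only renames the scheme.
func newListNamespacesRequest(baseURL, token string, page int, search string) (*http.Request, error) {
	q := url.Values{}
	if page > 0 {
		q.Set("p", strconv.Itoa(page)) // page number, omitted for the first page
	}
	if search != "" {
		q.Set("s", search) // search namespaces by name
	}
	u := baseURL + "/namespaces"
	if len(q) > 0 {
		u += "?" + q.Encode()
	}
	req, err := http.NewRequest(http.MethodGet, u, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Bearer "+token)
	req.Header.Set("Accept", "application/json")
	return req, nil
}
```

The request is only built here, not sent. A real client would pass it to an `http.Client` and decode the JSON array of namespaces from the response.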

@@ -27,7 +27,7 @@ import (
 // @tags namespace
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param namespace body models.Namespace true "The namespace you want to create."
 // @Success 200 {object} models.Namespace "The created namespace."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid namespace object provided."


@@ -26,7 +26,7 @@ import (
 // @Description Delets a namespace
 // @tags namespace
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Namespace ID"
 // @Success 200 {object} models.Message "The namespace was successfully deleted."
 // @Failure 400 {object} code.vikunja.io/web.HTTPError "Invalid namespace object provided."


@@ -24,7 +24,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @tags namespace
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Namespace ID"
 // @Param namespace body models.Namespace true "The namespace with updated values you want to update."
 // @Success 200 {object} models.Namespace "The updated namespace."


@@ -20,12 +20,18 @@ import "code.vikunja.io/web"
 
 // NamespaceUser represents a namespace <-> user relation
 type NamespaceUser struct {
+// The unique, numeric id of this namespace <-> user relation.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id" param:"namespace"`
+// The user id.
 UserID int64 `xorm:"int(11) not null INDEX" json:"user_id" param:"user"`
+// The namespace id
 NamespaceID int64 `xorm:"int(11) not null INDEX" json:"namespace_id" param:"namespace"`
-Right UserRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)"`
+// The right this user has. 0 = Read only, 1 = Read & Write, 2 = Admin. See the docs for more details.
+Right UserRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)" maximum:"2" default:"0"`
 
+// A unix timestamp when this relation was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this relation was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`
 
 web.CRUDable `xorm:"-" json:"-"`


@@ -24,7 +24,7 @@ import "code.vikunja.io/web"
 // @tags sharing
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Namespace ID"
 // @Param namespace body models.NamespaceUser true "The user you want to add to the namespace."
 // @Success 200 {object} models.NamespaceUser "The created user<->namespace relation."


@@ -23,7 +23,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Description Delets a user from a namespace. The user won't have access to the namespace anymore.
 // @tags sharing
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param namespaceID path int true "Namespace ID"
 // @Param userID path int true "user ID"
 // @Success 200 {object} models.Message "The user was successfully deleted."


@@ -27,7 +27,7 @@ import "code.vikunja.io/web"
 // @Param id path int true "Namespace ID"
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search users by its name."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.UserWithRight "The users with the right they have."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "No right to see the namespace."
 // @Failure 500 {object} models.Message "Internal error"


@@ -27,7 +27,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Param namespaceID path int true "Namespace ID"
 // @Param userID path int true "User ID"
 // @Param namespace body models.NamespaceUser true "The user you want to update."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {object} models.NamespaceUser "The updated user <-> namespace relation."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have admin-access to the namespace"
 // @Failure 404 {object} code.vikunja.io/web.HTTPError "User or namespace does not exist."


@@ -20,12 +20,18 @@ import "code.vikunja.io/web"
 
 // TeamList defines the relation between a team and a list
 type TeamList struct {
+// The unique, numeric id of this list <-> team relation.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id"`
+// The team id.
 TeamID int64 `xorm:"int(11) not null INDEX" json:"team_id" param:"team"`
+// The list id.
 ListID int64 `xorm:"int(11) not null INDEX" json:"list_id" param:"list"`
-Right TeamRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)"`
+// The right this team has. 0 = Read only, 1 = Read & Write, 2 = Admin. See the docs for more details.
+Right TeamRight `xorm:"int(11) INDEX" json:"right" valid:"length(0|2)" maximum:"2" default:"0"`
 
+// A unix timestamp when this relation was created. You cannot change this value.
 Created int64 `xorm:"created" json:"created"`
+// A unix timestamp when this relation was last updated. You cannot change this value.
 Updated int64 `xorm:"updated" json:"updated"`
 
 web.CRUDable `xorm:"-" json:"-"`

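The `TeamList` JSON tags use snake_case (`team_id`, `list_id`). The sketch below shows the body a client might send to share a list with a team. `teamListBody` and `shareListWithTeam` are hypothetical; the struct only copies the JSON tags from the diff and uses a plain `int` in place of `TeamRight`. Since `id`, `created` and `updated` are assigned by the server, a client would presumably leave them at zero.

```go
package main

import "encoding/json"

// teamListBody copies the JSON tags of models.TeamList from the diff above
// (web.CRUDable is left out because it is tagged json:"-").
type teamListBody struct {
	ID      int64 `json:"id"`
	TeamID  int64 `json:"team_id"`
	ListID  int64 `json:"list_id"`
	Right   int   `json:"right"` // 0 = read only, 1 = read & write, 2 = admin
	Created int64 `json:"created"`
	Updated int64 `json:"updated"`
}

// shareListWithTeam builds the request body for giving a team a right on a list.
func shareListWithTeam(teamID, listID int64, right int) ([]byte, error) {
	return json.Marshal(teamListBody{TeamID: teamID, ListID: listID, Right: right})
}
```

`shareListWithTeam(7, 3, 1)` produces `{"id":0,"team_id":7,"list_id":3,"right":1,"created":0,"updated":0}`. Because `ListID` also carries `param:"list"`, the list id may well be taken from the URL path instead.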

@@ -24,7 +24,7 @@ import "code.vikunja.io/web"
 // @tags sharing
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "List ID"
 // @Param list body models.TeamList true "The team you want to add to the list."
 // @Success 200 {object} models.TeamList "The created team<->list relation."


@@ -23,7 +23,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Description Delets a team from a list. The team won't have access to the list anymore.
 // @tags sharing
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param listID path int true "List ID"
 // @Param teamID path int true "Team ID"
 // @Success 200 {object} models.Message "The team was successfully deleted."


@@ -27,7 +27,7 @@ import "code.vikunja.io/web"
 // @Param id path int true "List ID"
 // @Param p query int false "The page number. Used for pagination. If not provided, the first page of results is returned."
 // @Param s query string false "Search teams by its name."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {array} models.TeamWithRight "The teams with their right."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "No right to see the list."
 // @Failure 500 {object} models.Message "Internal error"


@@ -27,7 +27,7 @@ import _ "code.vikunja.io/web" // For swaggerdocs generation
 // @Param listID path int true "List ID"
 // @Param teamID path int true "Team ID"
 // @Param list body models.TeamList true "The team you want to update."
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Success 200 {object} models.TeamList "The updated team <-> list relation."
 // @Failure 403 {object} code.vikunja.io/web.HTTPError "The user does not have admin-access to the list"
 // @Failure 404 {object} code.vikunja.io/web.HTTPError "Team or list does not exist."


@@ -24,7 +24,7 @@ import "code.vikunja.io/web"
 // @tags team
 // @Accept json
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Team ID"
 // @Param team body models.TeamMember true "The user to be added to a team."
 // @Success 200 {object} models.TeamMember "The newly created member object"


@@ -21,7 +21,7 @@ package models
 // @Description Remove a user from a team. This will also revoke any access this user might have via that team.
 // @tags team
 // @Produce json
-// @Security ApiKeyAuth
+// @Security JWTKeyAuth
 // @Param id path int true "Team ID"
 // @Param userID path int true "User ID"
 // @Success 200 {object} models.Message "The user was successfully removed from the team."


@@ -20,12 +20,18 @@ import "code.vikunja.io/web"
 
 // TeamNamespace defines the relationship between a Team and a Namespace
 type TeamNamespace struct {
+// The unique, numeric id of this namespace <-> team relation.
 ID int64 `xorm:"int(11) autoincr not null unique pk" json:"id"`
+// The team id.
 TeamID int64 `xorm:"int(11) not null INDEX" json:"team_id" param:"team"`
+// The namespace id.
 NamespaceID int64 `xorm:"int(11) not null INDEX" json:"namespace_id" param:"namespace"`